Breaking Nvidia's Monopoly: How GLM-Image and Huawei's Ascend Chips Topped the Global AI Charts

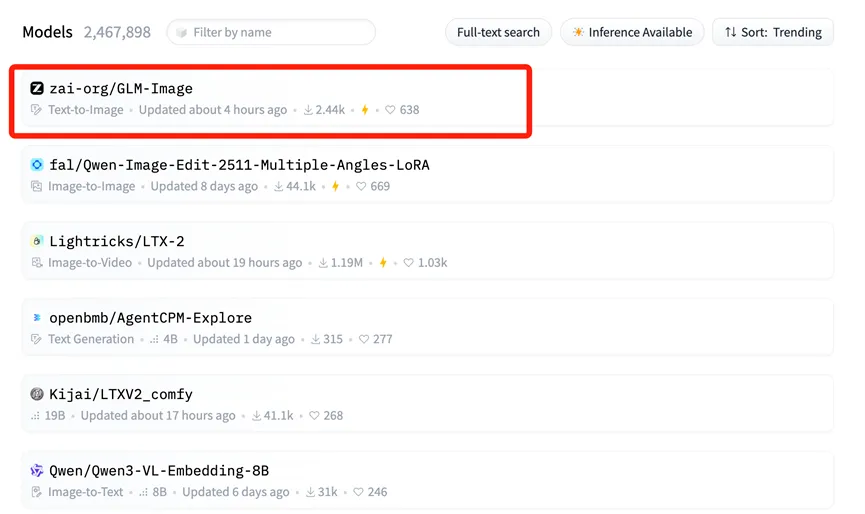

On January 14, GLM-Image, a multimodal image generation model jointly developed by Zhipu AI and Huawei, rose to the number one spot on the Hugging Face Trending list. The milestone caught the attention of industry players and capital markets worldwide.

For the uninitiated, Hugging Face is essentially the "World Expo" of open-source models: a central hub where major companies and independent developers alike publish their AI models. Topping its Trending list is akin to taking center stage at the world's premier tech conference, a sign of international recognition for GLM-Image's technical strength and practical value.

U.S. outlet CNBC noted that the model, trained by Zhipu and Huawei, effectively "breaks the myth" that advanced AI depends on U.S. chips. The achievement is not accidental; it reflects deep "software-hardware synergy" and progress across China's entire domestic AI industrial chain.

The "Full-Stack" Foundation: Huawei Ascend & MindSpore

The key enabler behind this achievement is the domestic computing infrastructure built by Huawei.

Unlike most previous AI models, which relied heavily on foreign GPUs (primarily Nvidia) for training, GLM-Image ran its entire lifecycle, from data preprocessing to large-scale training, on Huawei's Ascend-based Atlas 800T A2 servers and the MindSpore AI framework.

This fully autonomous "hardware + framework" combination is the real story here. It addresses the core "chokepoint" problem in AI development, showing that state-of-the-art (SOTA) models can be trained without the CUDA ecosystem. The Ascend 910B series, which powers the Atlas 800T A2, has demonstrated strong performance in large cluster deployments, offering a viable alternative for the global open-source community.

Deconstructing the Architecture: Why AR + Diffusion Matters

Zhipu AI also innovated significantly on the model's architecture. GLM-Image departs from the pure diffusion approach used by many Western open-source image models, such as Stable Diffusion and FLUX.

Instead, it uses a hybrid "Autoregressive (AR) + Diffusion Decoder" architecture:

- The "Brain" (Autoregressive): A 9B-parameter AR model interprets complex instructions, plans the layout, and generates the text that appears within images.

- The "Painter" (Diffusion): A 7B-parameter diffusion model acts as the decoder, filling in high-fidelity details based on the AR model's blueprint.

This approach targets a notorious pain point in AI image generation: rendering accurate text. AI-generated images have long featured garbled, unreadable lettering. According to Zhipu, the AR component's strong language understanding gives GLM-Image the highest Chinese character rendering accuracy among open-source models.

This technical path, which puts cognitive understanding before generation, mirrors the approach of Google's Nano Banana Pro, an image model built around "knowledge + reasoning" to handle complex tasks with greater precision than standard generative models.
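To make the division of labor concrete, here is a deliberately tiny Python sketch of the two-stage data flow. It is not GLM-Image's code and says nothing about its real internals: `ar_plan`, `diffusion_decode`, and `VOCAB_SIZE` are hypothetical names, the "AR model" is a fixed arithmetic rule rather than a 9B-parameter transformer, and the "diffusion decoder" is a simple noise-removal loop that is handed its clean target instead of learning to predict noise. What it does show is the shape of the pipeline: a blueprint produced token by token, each token conditioned on everything emitted before it, followed by an iterative refinement that starts from random noise and converges on that blueprint.

```python
import random
from typing import List

# Toy illustration only: both stages are stand-ins, not trained models.
VOCAB_SIZE = 16  # hypothetical size of the toy "visual token" vocabulary


def ar_plan(prompt: str, num_tokens: int) -> List[int]:
    """Toy 'brain': emit blueprint tokens one at a time, each conditioned on
    the prompt and on every token already emitted (autoregressive decoding)."""
    prompt_code = sum(ord(ch) for ch in prompt)  # deterministic prompt summary
    tokens: List[int] = []
    for step in range(num_tokens):
        # Stand-in for a transformer's next-token prediction over the prefix.
        next_token = (prompt_code + sum(tokens) + step) % VOCAB_SIZE
        tokens.append(next_token)
    return tokens


def diffusion_decode(tokens: List[int], steps: int = 10, seed: int = 0) -> List[float]:
    """Toy 'painter': start from Gaussian noise and remove it step by step,
    steered by the AR blueprint. A real decoder predicts the noise with a
    learned network; here the blueprint values serve directly as the target."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    rng = random.Random(seed)
    target = [t / (VOCAB_SIZE - 1) for t in tokens]  # tokens -> intensities in [0, 1]
    pixels = [rng.gauss(0.0, 1.0) for _ in tokens]    # pure noise to begin with
    for k in range(steps):
        remaining = steps - k
        # Close 1/remaining of the gap on every position; the final pass lands on the target.
        pixels = [p + (t - p) / remaining for p, t in zip(pixels, target)]
    return pixels


if __name__ == "__main__":
    blueprint = ar_plan("a poster that says 你好", num_tokens=8)
    image = diffusion_decode(blueprint)
    print(blueprint)
    print([round(v, 3) for v in image])
```

Because each blueprint token depends only on the prompt and the tokens before it, asking for a longer blueprint never changes the earlier tokens; that prefix property is what "autoregressive" means. The decoder, by contrast, updates every position at once on each pass, which matches the split described above: structure from the AR stage, detail from the diffusion stage.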

Market Reaction: The Rise of Knowledge Atlas (2513.HK)

The value of topping the global chart showed up immediately in the capital markets. When news of GLM-Image's open-source release broke, shares of Knowledge Atlas (2513.HK), the Hong Kong-listed company behind Zhipu AI, surged more than 16% in a single day. Investors appeared to recognize the long-term value of the "domestic chip + autonomous model" combination.

In fact, since listing on the Hong Kong Stock Exchange on January 8, billed as the world's first large-model stock, Knowledge Atlas has seen its share price more than double.

Democratizing AI Design: Open Source for All

Taking the long view, GLM-Image's success reflects the synergy of an entire industrial chain. This full-chain capability doesn't just serve tech giants; it significantly lowers the barrier to entry for small and medium-sized enterprises (SMEs).

With inference costing as little as RMB 0.1 (roughly US$0.014) per image, GLM-Image lets businesses use top-tier AI design tools at a fraction of traditional costs.
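For budgeting, the quoted unit price turns into batch costs with simple arithmetic. The sketch below assumes the RMB 0.1 per-image figure holds at any volume and uses an assumed exchange rate of 7.2 RMB per U.S. dollar (a placeholder; substitute the current rate). `batch_cost` is a hypothetical helper, and Python's `decimal` module is used so currency amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Tuple

# Unit price quoted in the article; assumed constant regardless of volume.
PRICE_PER_IMAGE_RMB = Decimal("0.1")
# Assumed exchange rate (RMB per USD); a placeholder, not a live quote.
RMB_PER_USD = Decimal("7.2")


def batch_cost(num_images: int) -> Tuple[Decimal, Decimal]:
    """Return (total in RMB, total in USD rounded to the cent) for num_images."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    total_rmb = PRICE_PER_IMAGE_RMB * num_images
    total_usd = (total_rmb / RMB_PER_USD).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return total_rmb, total_usd


if __name__ == "__main__":
    rmb, usd = batch_cost(10_000)
    print(f"10,000 images: RMB {rmb} (about US${usd})")
```

Under these assumptions, 10,000 images come to RMB 1,000, or about US$138.89.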

Today, the open-source code and weights for GLM-Image are available simultaneously on GitHub and Hugging Face. Developers worldwide can now freely use this "fully autonomous solution," challenging the long-held narrative that cutting-edge model training depends solely on U.S. silicon.