Google Veo 3.1 Review: The 4K, Vertical, and Consistent Video Revolution

Introduction

The AI video generation landscape has been plagued by three persistent challenges: resolution limitations, aspect ratio constraints, and character consistency issues. Google's Veo 3.1 addresses all three simultaneously, marking a significant milestone in the evolution of AI video technology.

Veo 3.1 is Google's most ambitious video generation model to date, bringing native 4K resolution, 9:16 vertical video support, and identity consistency across shots. Together, these features address the most common pain points for content creators, filmmakers, and social media professionals.

In this comprehensive review, we will explore:

- How native 4K output eliminates the need for external upscalers

- The impact of 9:16 vertical video on mobile-first content creation

- The revolutionary identity consistency engine for character locking

- A sneak peek at the Veo 3.2 code references discovered by Bedros Pamboukian

The Clarity Revolution: Native 4K Output

Breaking the Resolution Barrier

Previous AI video models typically maxed out at 1080p, requiring users to employ third-party upscalers to achieve 4K quality. Google Veo 3.1 changes this paradigm by offering native 4K output through its API, delivering unprecedented clarity and detail directly from the generation process.

Technical Implementation: The 4K capability is achieved through a multi-stage generation process that combines high-resolution latent diffusion with temporal coherence algorithms. Unlike simple upscaling, Veo 3.1's native 4K generation maintains consistent detail across frames, eliminating the artifacts and blurring that often plague post-processed upscaling.

File Size and Quality Considerations

One notable aspect of Veo 3.1's 4K output is the substantial file size. An 8-second 4K video can reach approximately 50 MB, which works out to an average bitrate of roughly 50 Mbit/s.

This file size indicates:

- Quality-first compression: Encoding that spends bits to maintain visual fidelity rather than to minimize size

- Rich detail preservation: Minimal compression artifacts

- Professional workflow compatibility: Suitable for broadcast and cinematic applications

Tip: When generating 4K content with Veo 3.1, budget storage and bandwidth up front. At roughly 50 MB per 8-second clip, a library of drafts and retakes grows quickly.
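
To put the figure of about 50 MB per 8-second clip in planning terms, here is a minimal sketch of the arithmetic. It assumes decimal units (1 MB = 8 megabits, 1 GB = 1000 MB). The function names, the 200-clip batch, and the 20 Mbit/s uplink are illustrative assumptions, not measurements.

```python
def average_bitrate_mbps(size_mb: float, duration_s: float) -> float:
    """Average bitrate in megabits per second (decimal units: 1 MB = 8 Mbit)."""
    return size_mb * 8 / duration_s


def storage_gb(clip_count: int, size_mb: float = 50.0) -> float:
    """Total storage in decimal gigabytes for clip_count clips of size_mb each."""
    return clip_count * size_mb / 1000


def upload_seconds(size_mb: float, uplink_mbps: float) -> float:
    """Ideal transfer time for one clip over a link of uplink_mbps (no overhead)."""
    return size_mb * 8 / uplink_mbps


if __name__ == "__main__":
    # Figures from the review: roughly 50 MB for an 8-second 4K clip.
    print(f"Average bitrate: {average_bitrate_mbps(50, 8):.0f} Mbit/s")
    # Hypothetical batch of 200 drafts and retakes.
    print(f"200 clips: {storage_gb(200):.0f} GB")
    # Hypothetical 20 Mbit/s uplink.
    print(f"Upload per clip: {upload_seconds(50, 20):.0f} s")
```

Actual file sizes will vary with scene complexity and encoder settings, so treat the 50 MB input as a rough upper guide rather than a constant.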

Mobile-First: Native 9:16 Vertical Generation

The End of Manual Cropping

For social media creators, the transition from landscape to vertical video has been a constant challenge. Traditional AI video generators primarily output 16:9 content, forcing creators to manually crop or use complex editing workflows to adapt content for platforms like TikTok, Instagram Reels, and YouTube Shorts.

Veo 3.1's native 9:16 support eliminates this friction by generating content composed for mobile viewing from the start. The model understands vertical composition principles, ensuring that key visual elements remain centered and properly framed within the 9:16 aspect ratio.
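
The cost of the manual-cropping route is easy to quantify. The sketch below computes how much of a landscape frame survives a full-height 9:16 center crop. It assumes a 3840x2160 source as the 16:9 "4K" frame; the function names are illustrative.

```python
from fractions import Fraction

VERTICAL = Fraction(9, 16)  # target width:height ratio


def center_crop_width(src_height: int, target: Fraction = VERTICAL) -> int:
    """Width of the widest target-ratio window that keeps the full source height."""
    return int(src_height * target)


def retained_fraction(src_width: int, src_height: int,
                      target: Fraction = VERTICAL) -> Fraction:
    """Share of the source frame's pixels that survive the center crop."""
    return Fraction(center_crop_width(src_height, target), src_width)


if __name__ == "__main__":
    # Assumed 16:9 4K source frame.
    width, height = 3840, 2160
    crop_w = center_crop_width(height)
    kept = retained_fraction(width, height)
    print(f"Cropped frame: {crop_w}x{height}")
    print(f"Pixels kept: {float(kept):.1%}")
```

Under these assumptions the crop keeps a 1215x2160 window, about a third of the original pixels, which is why a vertical clip cropped from landscape footage ends up well below 4K while a natively generated 9:16 clip does not.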

Composition Intelligence

What sets Veo 3.1 apart is its understanding of vertical composition dynamics. The model automatically:

- Centers subjects within the vertical frame

- Optimizes text placement for mobile readability

- Maintains visual hierarchy in vertical space

This intelligent composition eliminates the guesswork from vertical content creation, allowing creators to focus on storytelling rather than technical adjustments.

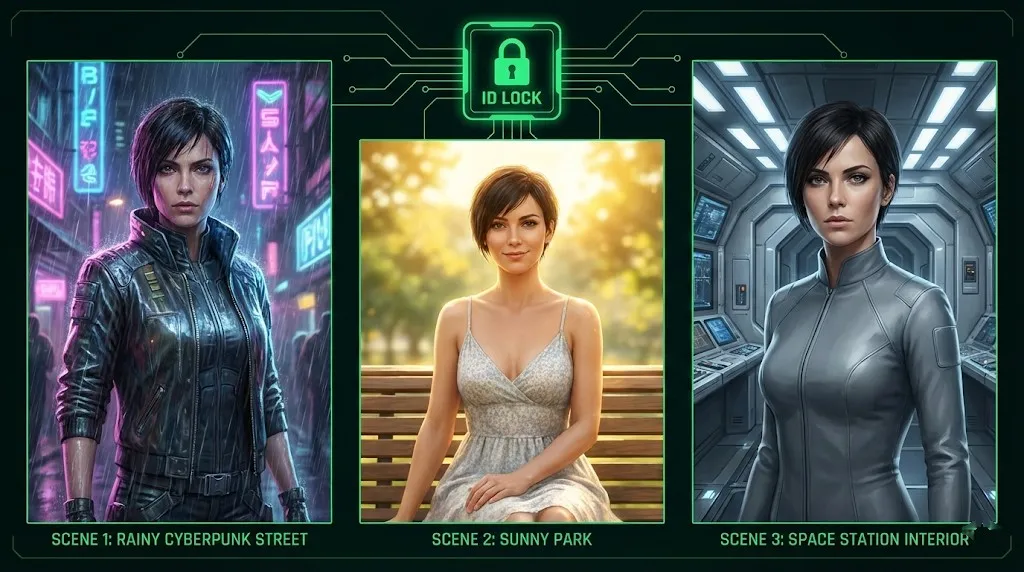

The Holy Grail: Identity Consistency

Solving the Character Consistency Problem

One of the most challenging aspects of AI video generation has been maintaining consistent character identity across different shots and scenes. Previous models often struggled with facial features, clothing details, and overall appearance consistency, limiting their usefulness for narrative content.

Veo 3.1's identity consistency engine introduces a breakthrough approach to this problem. By allowing users to upload multiple reference images of a character, the model can "lock" specific facial features, clothing elements, and physical characteristics across generated sequences.

How Identity Locking Works

The consistency system operates through three key mechanisms:

- Multi-image reference processing: Users can upload multiple reference images (e.g., from different angles)

- Feature extraction and mapping: The model identifies and maps key facial landmarks

- Temporal coherence enforcement: Consistency is maintained across frames and scenes

This technology enables creators to:

- Generate multiple shots of the same character in different environments

- Maintain consistent appearance across different camera angles

- Create coherent narrative sequences with recurring characters
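
Creators who want to verify consistency rather than eyeball it can score each frame against a reference. The sketch below is one possible review-side check, not a description of how Veo 3.1 works internally. It assumes you already have a numeric face embedding for the reference image and for each sampled frame from a face-embedding tool of your choice; the toy vectors, the `drifting_frames` helper, and the 0.8 threshold are all hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / norm


def drifting_frames(reference: Sequence[float],
                    frames: Sequence[Sequence[float]],
                    threshold: float = 0.8) -> list[int]:
    """Indices of frames whose similarity to the reference falls below threshold."""
    return [i for i, frame in enumerate(frames)
            if cosine_similarity(reference, frame) < threshold]


if __name__ == "__main__":
    # Toy 3-dimensional embeddings; real face embeddings have far more dimensions.
    reference = [1.0, 0.0, 0.0]
    frames = [
        [0.9, 0.1, 0.0],  # close to the reference
        [1.0, 0.0, 0.1],  # close to the reference
        [0.1, 0.9, 0.2],  # a different-looking face
    ]
    print(drifting_frames(reference, frames))
```

A sensible threshold depends on the embedding model used, so calibrate it on clips you have already judged by eye before trusting the numbers.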

Workflow: From Static Image to 4K Vertical Video

End-to-End Production Pipeline

Combining Veo 3.1's three major features enables a streamlined production workflow that was previously impossible with AI video tools. Here's a theoretical workflow for creating professional vertical content:

Step 1: Character Preparation

- Gather high-quality reference images of your subject

- Ensure images show different angles and expressions

- Upload references to establish identity consistency

Step 2: Prompt Engineering

- Write detailed prompts including vertical composition cues

- Specify 4K resolution and 9:16 aspect ratio

- Include character consistency parameters

Step 3: Generation and Review

- Generate initial sequences

- Review for consistency and quality

- Make iterative improvements

Step 4: Final Output

- Export native 4K vertical video

- No additional upscaling or cropping required

- Ready for direct upload to social platforms
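
The four steps above can be captured as a small pre-flight checklist before any credits are spent. The sketch below is a plain Python structure for keeping job settings together and catching obvious omissions. It is not the Veo API: the `VideoJob` class, its field names, and the allowed values are hypothetical, chosen only to mirror the settings discussed in this review.

```python
from dataclasses import dataclass, field

# Hypothetical option sets, limited to values mentioned in this review.
ALLOWED_ASPECT_RATIOS = {"16:9", "9:16"}
ALLOWED_RESOLUTIONS = {"1080p", "4k"}


@dataclass
class VideoJob:
    """Settings for one generation request (illustrative, not a real API schema)."""
    prompt: str
    aspect_ratio: str = "9:16"
    resolution: str = "4k"
    reference_images: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the job looks ready."""
        issues = []
        if not self.prompt.strip():
            issues.append("prompt is empty")
        if self.aspect_ratio not in ALLOWED_ASPECT_RATIOS:
            issues.append(f"unsupported aspect ratio: {self.aspect_ratio}")
        if self.resolution not in ALLOWED_RESOLUTIONS:
            issues.append(f"unsupported resolution: {self.resolution}")
        if not self.reference_images:
            issues.append("no reference images for identity consistency")
        return issues


if __name__ == "__main__":
    job = VideoJob(
        prompt="Vertical close-up of the character walking through a night market",
        reference_images=["front.png", "profile.png"],  # hypothetical file names
    )
    print(job.problems() or "ready to submit")
```

Map these fields onto whatever parameters your actual client or interface exposes; the point is only to check Steps 1 and 2 mechanically before moving on to generation.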

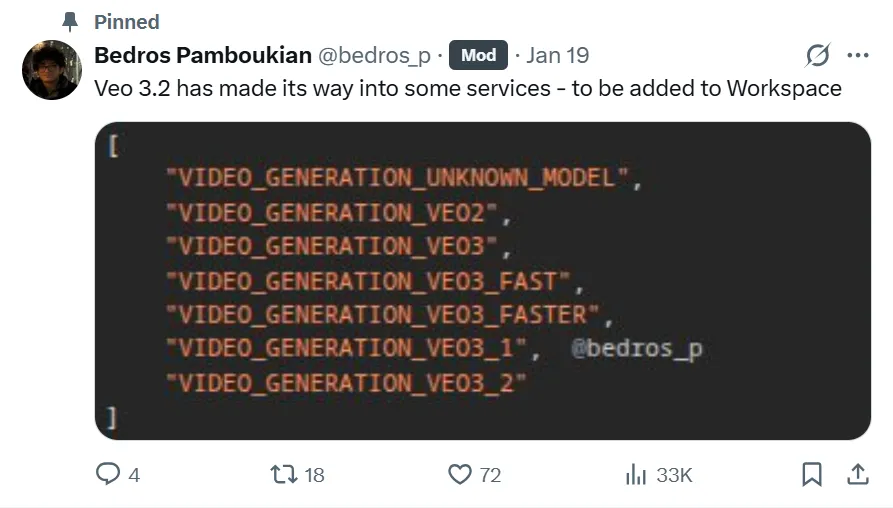

What's Next? A Sneak Peek at Veo 3.2

The Code Leak Discovery

While Veo 3.1 represents a significant advancement, evidence suggests Google is already working on the next iteration. Researcher Bedros Pamboukian recently discovered references to VIDEO_GENERATION_VE03 in Google's codebase, indicating active development of Veo 3.2.

Source: Bedros Pamboukian via X

What We Know (and What We Don't)

The discovery of VIDEO_GENERATION_VE03 indicates that Google is continuing to invest in video generation technology. However, it's important to distinguish between confirmed facts and speculation:

Confirmed Information:

- Google is actively developing a new video generation model

- The internal codename suggests it's the successor to Veo 3.1

- Development is ongoing as of the discovery date

Unknown Factors:

- Specific feature improvements or new capabilities

- Release timeline and availability

- Pricing structure and accessibility

Responsible Speculation

Based on Google's development patterns and the evolution from Veo 1.0 to 3.1, we can reasonably expect Veo 3.2 to focus on enhanced temporal coherence and improved efficiency. However, it's crucial to emphasize that these are educated guesses based on industry trends, not confirmed features.

Conclusion

Google Veo 3.1 represents a watershed moment in AI video generation technology. By simultaneously addressing resolution limitations, aspect ratio constraints, and character consistency issues, Google has created a tool that genuinely meets the needs of professional content creators.

Key Takeaways:

- Native 4K eliminates upscaling dependencies, providing broadcast-quality output directly from generation

- 9:16 vertical video support streamlines mobile content creation workflows

- Identity consistency solves one of the most persistent challenges in AI video

- The discovered Veo 3.2 code reference suggests continued rapid innovation in this space

For creators who have been waiting for AI video technology to mature enough for professional applications, Veo 3.1 may well be the turning point. As the technology continues to evolve, we can expect even more sophisticated tools to emerge. But for now, Veo 3.1 stands as the most comprehensive solution available for high-quality, consistent AI video generation.
