I Tested Kling 3.0 Omni: 15s Shots, Native Audio, and The Truth About Gen-4.5

The AI video generation landscape in 2026 is a bloodbath. With Runway Gen-4.5 dominating the VFX space and Sora 2.0 ruling social media, a new challenger needed to bring something revolutionary to the table.

Enter Kling VIDEO 3.0 Omni.

While the marketing brochures highlight the "15-second generation," the real story, the one most reviews are missing, is the "Omni" architecture. It’s not just a video model anymore; it’s an Audio-Visual Integrated Engine.

But can it truly compete with the polished workflow of Gen-4.5?

I upgraded to the Ultra Subscription, cleared my schedule, and spent the last 24 hours pushing Kling 3.0 Omni to its absolute limits. I tested the native audio sync, the 15-second coherence, and the director controls.

Here is my exhaustive, no-nonsense review.

1. The "15-Second" Revolution: Native vs. Extension

Let's clarify a massive technical misconception. Most models (like Luma or older Gen-3 iterations) achieve long videos by "extending" a 5-second clip multiple times. This patchwork approach usually results in "morphing" or "dream-fuzz" by the end of the clip.

Kling 3.0 Omni is different. It introduces Native 15-second Burst Mode.

This means the model calculates the physics and trajectory of the entire 15-second sequence before generating the first pixel.

The Real Stress Test

I tested this with a complex prompt designed to break temporal consistency. Instead of a simple scene, I asked for a continuous narrative shot:

"Opening with an ultra-wide-angle medium-long shot tracking horizontally, the stabilizer moves low to the ground... The protagonist is a young woman in a dark green long dress, running with all her might on the garden lawn illuminated by moonlight..."

The Analysis:

- 0s - 5s: The cloth simulation on the "dark green long dress" reacted realistically to the running motion.

- 5s - 15s: Crucially, the "moonlight" lighting remained consistent throughout the tracking shot. The environment didn't warp as the camera moved low to the ground.

The Verdict: It works. This stability effectively moves AI video from "GIF creation" to "Short Film production." However, be warned: render times for Burst Mode are heavy, so expect long waits even on the Ultra plan.

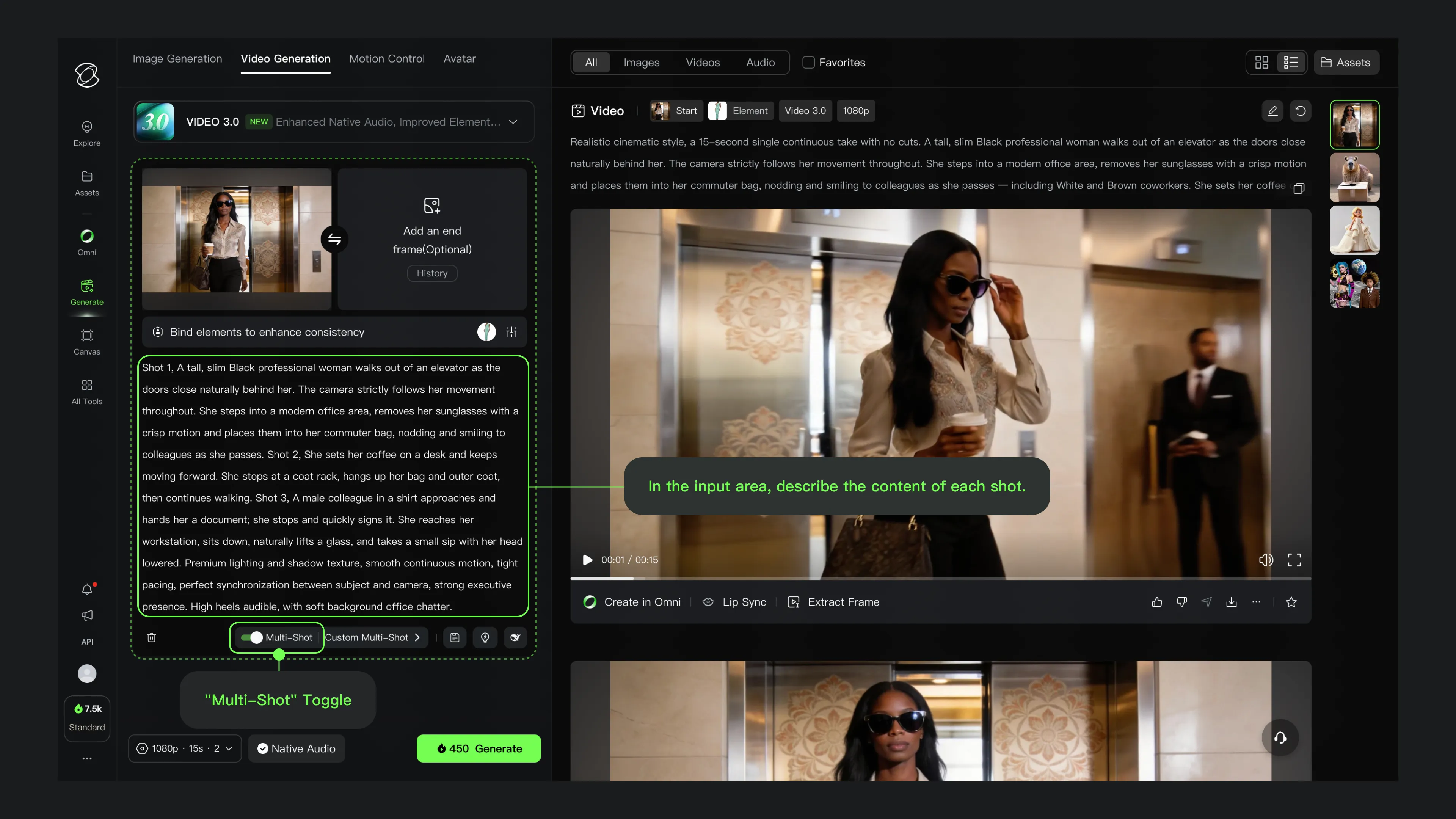

2. Multi-Shot Narratives: The "Director's Chair"

This is where Kling 3.0 challenges Runway's dominance. The Multi-Shot / AI Director interface allows you to define specific camera movements with surgical precision.

Above: The new camera control interface in Kling 3.0.


Precision Control vs. Random Luck

In previous AI tools, getting a "Zoom Out" shot was often a roll of the dice. You typed "Zoom Out" and hoped for the best.

With the new Camera Control UI (as shown above), you can explicitly set parameters for Horizontal Pan, Vertical Tilt, and Zoom.

User Experience: During my testing, I found that having these explicit sliders drastically reduced the number of "rerolls" needed to get a specific shot. If you are storyboarding a film where Shot A must pan left to reveal a building, this feature is a game-changer compared to relying purely on text prompts.
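If you want to plan shots like this before opening the interface, a simple storyboard can record each shot's pan, tilt, and zoom next to its prompt. The sketch below is a minimal Python example under stated assumptions: `ShotPlan`, `describe_camera`, the example prompts, and the sign convention (negative pan means left, negative tilt means down, negative zoom means zoom out) are all hypothetical, and the numbers are not Kling's actual slider ranges. It only produces notes you would transcribe into the sliders by hand.

```python
from dataclasses import dataclass


@dataclass
class ShotPlan:
    """Hypothetical storyboard entry; not a Kling API object.

    Sign convention (an assumption for this sketch): negative pan = left,
    negative tilt = down, negative zoom = zoom out.
    """
    name: str
    prompt: str
    pan: float = 0.0
    tilt: float = 0.0
    zoom: float = 0.0

    def describe_camera(self) -> str:
        # Turn the numeric values into a plain-English note to read off
        # while setting the Horizontal Pan, Vertical Tilt, and Zoom sliders.
        moves = []
        if self.pan:
            moves.append(f"pan {'left' if self.pan < 0 else 'right'} {abs(self.pan):g}")
        if self.tilt:
            moves.append(f"tilt {'down' if self.tilt < 0 else 'up'} {abs(self.tilt):g}")
        if self.zoom:
            moves.append(f"zoom {'out' if self.zoom < 0 else 'in'} {abs(self.zoom):g}")
        # A shot with all values at zero is a locked-off camera.
        return ", ".join(moves) or "static"


storyboard = [
    ShotPlan("Shot A", "Empty street at dawn, camera reveals a glass tower", pan=-5),
    ShotPlan("Shot B", "Close-up of the tower entrance", zoom=3),
]

if __name__ == "__main__":
    for shot in storyboard:
        print(f"{shot.name}: {shot.describe_camera()}")
```

Writing the camera move down explicitly per shot mirrors the point above: the movement is decided up front rather than left to how the text prompt happens to be interpreted.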

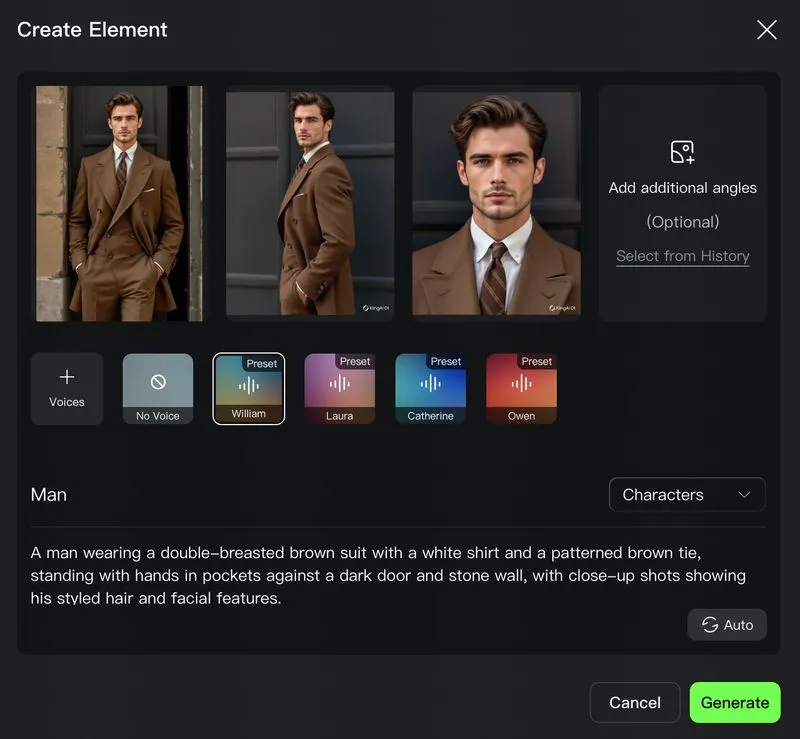

3. Element Consistency: Solving the Identity Crisis

The biggest pain point in AI filmmaking is Character Consistency. You generate a great actor in Shot A, but in Shot B, they look like their cousin.

Kling 3.0 addresses this with the Element Consistency module. I tested the "Four-Angle" Method: uploading a front, side, and 45-degree view of a character.

Above: Uploading reference images to lock in character identity.


The Verdict: When I placed this character in three different environments (Cyberpunk city, Medieval forest, Office), the facial structure remained about 90% accurate. It’s arguably better than LoRA training because it happens instantly without fine-tuning.

4. The Sound of AI: Native Audio & Lip Sync

This is the "Omni" part of Kling 3.0. Unlike Gen-4.5, which often requires external tools for sound, Kling 3.0 generates audio and video simultaneously.

I decided to skip the settings panel and jump straight to the results, because seeing (and hearing) is believing. I fed it a script for a character dialogue scene to test the Lip Sync capabilities.

The Analysis:

- Lip Sync Accuracy: Watch the video above closely. The mouth movements match the phonemes surprisingly well. It's not 100% "human" yet, since there is a slight robotic stiffness in the jaw, but for a native generation without post-processing, it's a massive leap forward.

- Audio-Visual Coherence: The ambient sound matches the environment perfectly.

- Workflow Impact: This effectively eliminates the need for third-party lip-sync tools for background characters or mid-shots, streamlining the professional AI workflow.

5. Advanced Prompt Engineering Guide (Cheat Sheet)

To get the results I showed above, you can't just type "a cool video." Kling 3.0 demands a specific prompting structure.

The "Cinematic Formula"

Structure your prompt in this order:

[Camera Movement] + [Lighting/Atmosphere] + [Subject Action] + [Environment Details] + [Technical Specs]

Example Prompt:

"Drone shot pulling backward, Golden hour lighting with volumetric fog, A samurai practicing sword forms slowly, on a cliff edge overlooking a stormy ocean, 8k resolution, photorealistic, cinematic depth of field --ar 16:9"
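The formula is just an ordered concatenation of five slots, so it can be captured in a few lines. This is a minimal sketch, not a Kling tool: the `CinematicPrompt` class and its field names are hypothetical, and the `--ar 16:9` suffix is copied verbatim from the example prompt rather than being a parameter confirmed to be parsed by Kling. Rendering the samurai example reproduces the prompt above word for word.

```python
from dataclasses import dataclass


@dataclass
class CinematicPrompt:
    """Hypothetical helper holding the five slots of the "Cinematic Formula"."""
    camera_movement: str
    lighting_atmosphere: str
    subject_action: str
    environment_details: str
    technical_specs: str

    def render(self) -> str:
        # Keep the slots in the formula's order and skip any left empty.
        parts = [
            self.camera_movement,
            self.lighting_atmosphere,
            self.subject_action,
            self.environment_details,
            self.technical_specs,
        ]
        return ", ".join(p.strip() for p in parts if p.strip())


samurai = CinematicPrompt(
    camera_movement="Drone shot pulling backward",
    lighting_atmosphere="Golden hour lighting with volumetric fog",
    subject_action="A samurai practicing sword forms slowly",
    environment_details="on a cliff edge overlooking a stormy ocean",
    technical_specs="8k resolution, photorealistic, cinematic depth of field --ar 16:9",
)

if __name__ == "__main__":
    print(samurai.render())
```

Keeping the slots in a fixed order makes it easy to swap one element (say, the lighting) while holding the rest of the shot constant between rerolls.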

6. The 2026 Landscape: Kling 3.0 vs. Runway Gen-4.5

This is the comparison everyone wants to see. Runway recently dropped Gen-4.5, raising the bar for motion control. How does Kling 3.0 Omni compare?

| Feature | Kling 3.0 Omni (Ultra) | Runway Gen-4.5 | Sora 2.0 (App) |
|---|---|---|---|
| Max Native Duration | 15s (Native Burst) | 10s (Extended) | 12s |
| Audio Generation | Native (Video + Audio) | External / Separate Tool | Native |
| Motion Control | Director UI (Best for Camera) | Motion Brush 2.0 (Best for Objects) | Physics Simulation |
| Consistency | 9/10 (Element ID) | 8.5/10 (Gen-ID) | 8.5/10 |
| Realism | Photorealistic / Filmic | Stylized / Sharp | Hyper-Real |
| Best For | Narrative Filmmaking | VFX & Commercials | Viral Social Content |

The Takeaway:

- Choose Runway Gen-4.5 if you are a VFX artist who needs to control exactly how a specific car drifts around a corner (Motion Brush is still king there).

- Choose Kling 3.0 Omni if you are a Director. If you need a character to act consistently for 15 seconds with synchronized audio, Kling is the only integrated solution right now.

Final Verdict: Is It Worth the Upgrade?

After 24 hours of non-stop testing, my answer is a resounding YES.

Kling 3.0 Omni isn't just an update; it's a platform shift. By integrating Native Audio with 15-second generation, it removes the friction of switching between five different AI tools to make one clip.

While Runway Gen-4.5 might still have the edge in granular object control, Kling 3.0 wins on storytelling flow.

Want More Prompt Tricks?

We are currently compiling a massive "Kling 3.0 Advanced Prompt Library" with more than 50 tested cinematic prompts.

We will be publishing it as a dedicated blog post very soon.

👉 Bookmark Kling2-6.com now and stay tuned so you don't miss the update!
