Kling 2.6 Ultimate Guide: Mastering Motion Control, Lip Sync, and Model Download

Kling 2.6 represents a significant leap forward in AI video generation technology, offering unprecedented control over motion, synchronization, and visual quality. As the latest iteration of Kling's powerful video generation platform, Kling 2.6 introduces groundbreaking features that set new standards in the industry. Whether you're a content creator, developer, or AI enthusiast, understanding Kling 2.6's capabilities is essential for staying ahead in the rapidly evolving landscape of AI-powered video production.

This comprehensive Kling 2.6 guide will walk you through everything you need to know about leveraging the platform's advanced features, from mastering motion control to accessing the model locally. We'll explore practical applications, technical implementation details, and how Kling 2.6 compares to competing solutions in the market.

Why Kling 2.6 is a Game Changer in AI Video

The release of Kling 2.6 brings notable improvements to AI video generation. The platform's enhanced architecture delivers better temporal consistency, smoother motion transitions, and more accurate subject tracking than previous versions. Its improved handling of spatial relationships and physics simulation results in videos that feel more natural and cinematic.

What sets Kling 2.6 apart is its focus on user control. While many AI video tools operate as black boxes, Kling 2.6 provides granular control over camera movements, subject behavior, and scene composition. This level of control makes it particularly valuable for professional video production workflows where creative direction and technical precision are paramount.

The platform's enhanced rendering engine supports higher resolution outputs (up to 1080p) with improved frame rates, making it suitable for professional applications ranging from marketing content to educational materials. Kling 2.6 also introduces advanced style transfer capabilities, allowing users to apply consistent visual aesthetics across multiple video generations.

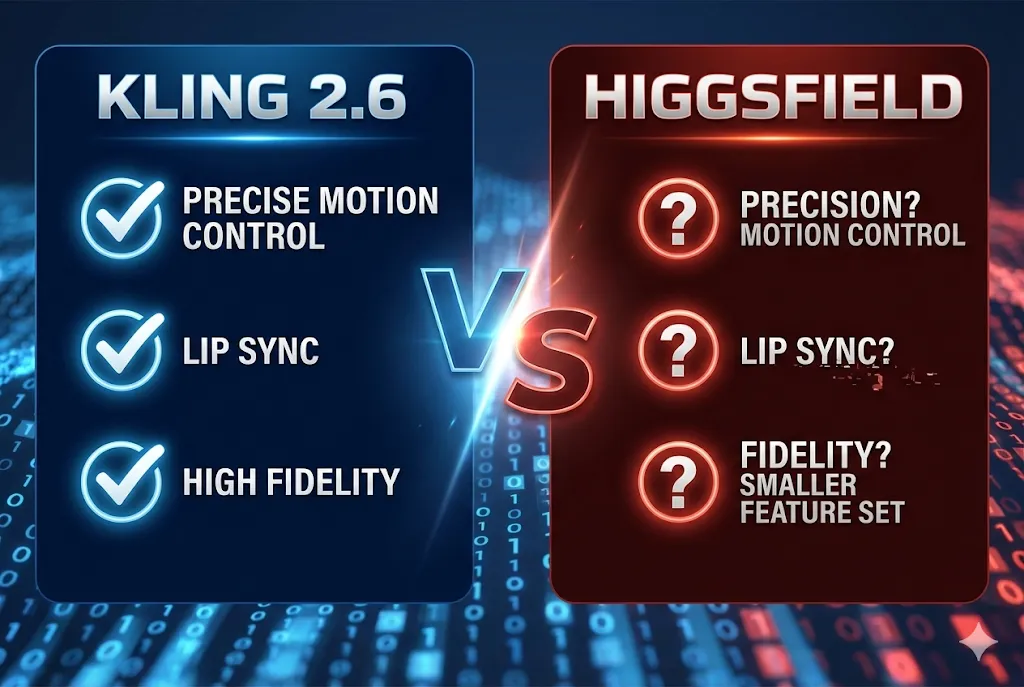

Kling 2.6 vs Higgsfield Unlimited: A Quick Comparison

When comparing Kling 2.6 with Higgsfield Unlimited, several key differences emerge that influence which platform is better suited to a given use case. Kling 2.6 excels at fine-grained control over camera movements and subject motion, while Higgsfield Unlimited focuses more on automated generation with less user intervention.

In terms of output quality, Kling 2.6 demonstrates superior temporal coherence, especially in scenes with complex movements or multiple subjects. The platform's motion control features allow for precise camera work that closely mimics professional cinematography techniques. Higgsfield Unlimited, while capable of generating impressive results, often requires more iterations to achieve similar levels of control.

Another significant difference lies in the accessibility of the underlying models. Kling 2.6 offers more flexible deployment options, including local execution through Hugging Face integration, whereas Higgsfield Unlimited operates primarily through cloud-based services. This distinction is crucial for developers and organizations with specific data privacy or workflow integration requirements.

| Feature | Kling 2.6 | Higgsfield Unlimited |
|---|---|---|
| Motion Control Precision | High - Full parameter control over pan, tilt, zoom, dolly | Low - Limited automated camera movements |
| Lip Sync Availability | Yes - Advanced phoneme-based synchronization | No - Lip sync not available |
| Deployment Options | Both Local (Hugging Face) and Cloud | Cloud-only |
| Pricing Model | Free tier + Subscription plans | Subscription-only |
| Customization | High - Can modify model architecture | Low - Black-box solution |
| Video Resolution | Up to 1080p | Up to 720p |
| Batch Processing | Supported locally | Limited cloud processing |

Deep Dive into Kling Video 2.6 Motion Control

Motion control is one of Kling 2.6's most powerful features. Unlike video generation tools that produce static camera angles, Kling 2.6 enables sophisticated camera movements that add depth and dynamism to generated content. The motion control system exposes multiple parameters that can be adjusted independently or in combination to achieve specific cinematic effects.

At its core, the motion control system interprets user-defined parameters to generate smooth, natural-looking camera movements. These include pan (horizontal rotation), tilt (vertical rotation), zoom (focal length changes), and dolly (physical camera movement toward or away from subjects). Each parameter can be controlled with precision, allowing for complex multi-axis movements that would be challenging to achieve manually.

The system also includes intelligent motion prediction algorithms that anticipate subject movement and adjust camera behavior accordingly. This results in videos where the camera follows subjects naturally, maintaining proper framing and focus throughout the sequence. The motion control system's ability to understand scene composition and subject relationships makes it particularly effective for creating narrative-driven content.

Mastering the Camera Movement

To effectively use Kling 2.6 motion control, understanding the parameter ranges and their effects is essential. The pan parameter typically ranges from -45 to +45 degrees, with positive values rotating the camera to the right and negative values to the left. For subtle movements, values between -10 and +10 degrees work well, while more dramatic shots can use the full range.

The tilt parameter works the same way on the vertical axis, over a range of -30 to +30 degrees. Values between -15 and +15 degrees are ideal for establishing shots or revealing scenes gradually. Combining pan and tilt movements creates diagonal camera paths that can add visual interest and guide viewer attention through the scene.

Zoom parameters are expressed as multipliers of the base focal length. A value of 1.0 represents no zoom, while values above 1.0 zoom in and values below 1.0 zoom out. Smooth zoom transitions change the value gradually over the duration of the shot; targets between 0.5x and 2.0x are the most common for cinematic effects.

The dolly parameter controls physical camera movement toward or away from subjects. Positive values move the camera closer, creating a sense of intimacy or urgency, while negative values pull back, revealing more context. Combining dolly movements with zoom adjustments creates the classic "dolly zoom" effect popularized in films like "Vertigo."
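The dolly zoom relationship can be made concrete with a little arithmetic. Under a simple pinhole-camera assumption, a subject's on-screen size is proportional to focal length divided by subject distance, so holding that ratio constant keeps the subject the same size while the background appears to stretch or compress. The sketch below is illustrative only: the function name and the use of millimetres and metres are assumptions, and it says nothing about how Kling 2.6 maps its normalized Zoom and Dolly values internally.

```python
def focal_length_for_constant_size(f0_mm: float, d0_m: float, d_m: float) -> float:
    """Focal length that keeps a subject the same on-screen size.

    Pinhole model: image size is proportional to focal_length / distance,
    so f / d must stay equal to f0 / d0.
    """
    if d0_m <= 0 or d_m <= 0:
        raise ValueError("distances must be positive")
    return f0_mm * d_m / d0_m


# Pulling the camera back from 4 m to 8 m requires doubling the focal length.
start_focal = 50.0
for distance in (4.0, 6.0, 8.0):
    focal = focal_length_for_constant_size(start_focal, 4.0, distance)
    print(f"distance {distance:.1f} m -> focal length {focal:.1f} mm")
```

This is why the effect pairs a zoom-in with a dolly-out (or the reverse): the two changes cancel for the subject but not for the background.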

For optimal results, the Kling 2.6 tutorial recommends starting with single-axis movements before combining multiple parameters. This approach helps users understand how each parameter affects the final output and allows for more precise control when creating complex camera movements.

Motion Control Parameter Quick Reference

| Parameter | Range | Best For... | Recommended Values |
|---|---|---|---|
| Pan | -45° to +45° | Horizontal scene exploration, following subjects | Subtle: -10° to +10°, Dramatic: -45° to +45° |
| Tilt | -30° to +30° | Vertical reveals, establishing shots, dramatic angles | Subtle: -15° to +15°, Extreme: -30° to +30° |
| Zoom | 0.5x to 3.0x | Focusing attention, creating tension, revealing details | Slow zoom: 0.8x to 1.2x, Dramatic: 1.5x to 2.5x |
| Dolly | -1.0 to +1.0 | Creating depth, intimate moments, expanding context | Subtle: -0.3 to +0.3, Strong: -0.8 to +0.8 |

Pro Tips:

- Combine Pan and Tilt for diagonal camera movements that guide viewer attention

- Use Dolly Zoom (Zoom + Dolly in opposite directions) for the classic "Vertigo effect"

- Start with single-axis movements before attempting complex multi-parameter combinations

- Test parameters with shorter video durations (3-5 seconds) before applying to longer sequences
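When scripting many shots, it can help to validate parameter values against the quick-reference ranges before submitting a job. The following is a minimal sketch, assuming the ranges in the table above; `CameraMove` and `PARAMETER_RANGES` are hypothetical names for illustration and are not part of any official Kling API.

```python
from dataclasses import dataclass

# Ranges copied from the quick-reference table.
PARAMETER_RANGES = {
    "pan": (-45.0, 45.0),    # degrees; positive rotates right
    "tilt": (-30.0, 30.0),   # degrees
    "zoom": (0.5, 3.0),      # multiplier of the base focal length
    "dolly": (-1.0, 1.0),    # normalized; positive moves closer
}


@dataclass(frozen=True)
class CameraMove:
    """Hypothetical container for one camera move (not an official API)."""
    pan: float = 0.0
    tilt: float = 0.0
    zoom: float = 1.0
    dolly: float = 0.0

    def __post_init__(self) -> None:
        # Reject any value outside its documented range.
        for name, (low, high) in PARAMETER_RANGES.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(f"{name}={value} is outside [{low}, {high}]")

    def active_axes(self) -> list:
        """Names of parameters that differ from their neutral value."""
        neutral = CameraMove()
        return [n for n in PARAMETER_RANGES if getattr(self, n) != getattr(neutral, n)]


subtle_pan = CameraMove(pan=10)
print(subtle_pan.active_axes())  # ['pan']
```

Checking `active_axes()` is one way to follow the advice on starting with single-axis movements: a shot with more than one active axis can be flagged for review before it is combined with others.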

Recommended Settings for Cinematic Shots

Here are some proven parameter combinations for achieving specific cinematic effects:

- Drone Shot: Pan 0, Tilt -15, Zoom 0.8, Dolly 0.2
  - Creates an aerial perspective with slight downward angle
  - Perfect for establishing scenes and showing environment context

- Dolly Zoom: Zoom 2.0 + Dolly -0.5
  - Creates the famous "Vertigo effect" where the subject remains the same size while the background appears to stretch or compress
  - Ideal for dramatic moments and psychological tension

- Tracking Shot: Pan 15, Tilt 0, Zoom 1.0, Dolly 0.3
  - Follows a subject moving horizontally through the frame
  - Great for action sequences and character introductions

- Reveal Shot: Pan 0, Tilt 0, Zoom 0.7, Dolly -0.4
  - Gradually reveals more of the scene while pulling back
  - Effective for surprise reveals and expanding narrative scope
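The presets above can be kept as data and eased in over the length of a shot, which is one way to get the gradual transitions recommended earlier. This is a sketch under assumptions: the dictionary layout, the neutral starting pose, and the smoothstep easing curve are all illustrative choices, and whether a given Kling 2.6 interface accepts per-frame values is not established here.

```python
# Presets copied from the list above; the dictionary layout is an assumption.
NEUTRAL = {"pan": 0.0, "tilt": 0.0, "zoom": 1.0, "dolly": 0.0}
PRESETS = {
    "drone": {"pan": 0.0, "tilt": -15.0, "zoom": 0.8, "dolly": 0.2},
    "dolly_zoom": {"zoom": 2.0, "dolly": -0.5},
    "tracking": {"pan": 15.0, "tilt": 0.0, "zoom": 1.0, "dolly": 0.3},
    "reveal": {"pan": 0.0, "tilt": 0.0, "zoom": 0.7, "dolly": -0.4},
}


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]."""
    return t * t * (3.0 - 2.0 * t)


def frame_schedule(preset: str, num_frames: int) -> list:
    """Per-frame parameter values, easing from neutral to the preset target."""
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    # Parameters a preset does not mention stay at their neutral value.
    target = {**NEUTRAL, **PRESETS[preset]}
    frames = []
    for i in range(num_frames):
        w = smoothstep(i / (num_frames - 1))
        frames.append({k: NEUTRAL[k] + w * (target[k] - NEUTRAL[k]) for k in NEUTRAL})
    return frames


for frame in frame_schedule("reveal", 5):
    print({k: round(v, 3) for k, v in frame.items()})
```

The first frame is the neutral pose and the last frame matches the preset, with the change concentrated in the middle of the shot.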

Experience the New Feature: Kling 2.6 Lip Sync

One of the most anticipated features in Kling 2.6 is its advanced lip sync capability. Kling 2.6 lip sync technology uses sophisticated audio analysis and facial animation algorithms to synchronize generated video characters with spoken audio. This feature opens up numerous possibilities for content creators, from educational videos to marketing materials and entertainment content.

The lip sync system works by analyzing audio input to identify phonemes, prosody, and timing information. It then maps these audio characteristics to appropriate facial expressions and mouth movements, ensuring that the generated character's lip movements match the spoken words naturally. The system also accounts for co-articulation effects, where the pronunciation of one phoneme influences the articulation of adjacent phonemes, resulting in more realistic speech animation.
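To make the phoneme-to-mouth-shape idea concrete, here is a deliberately simplified sketch that assigns one mouth shape (viseme) to each video frame from a list of timed phonemes. The phoneme labels, the viseme names, and the 24 fps default are assumptions chosen for illustration. It ignores prosody and co-articulation entirely and is not a description of Kling 2.6's actual pipeline.

```python
# Illustrative phoneme-to-viseme table; real systems use larger inventories.
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "aa": "open", "ae": "open",
    "uw": "rounded", "ow": "rounded",
    "sil": "rest",
}


def visemes_per_frame(phonemes, fps=24):
    """Map timed phonemes [(label, start_s, end_s), ...] to one viseme per frame."""
    if not phonemes:
        return []
    total_frames = round(phonemes[-1][2] * fps)
    frames = ["rest"] * total_frames
    for label, start, end in phonemes:
        # Unknown phonemes fall back to the resting mouth shape.
        viseme = PHONEME_TO_VISEME.get(label, "rest")
        for i in range(round(start * fps), min(round(end * fps), total_frames)):
            frames[i] = viseme
    return frames


timed = [("m", 0.0, 0.125), ("aa", 0.125, 0.375), ("sil", 0.375, 0.5)]
print(visemes_per_frame(timed))
```

A production system would blend neighbouring shapes rather than switching abruptly at phoneme boundaries, which is where the co-articulation handling described above comes in.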

What makes Kling 2.6's lip sync particularly impressive is its ability to handle various languages and speaking styles. The system has been trained on diverse linguistic datasets, enabling it to produce accurate lip sync for multiple languages and dialects. Additionally, it can adapt to different speaking styles, from casual conversation to formal presentations, adjusting the animation accordingly.

The lip sync feature integrates seamlessly with other Kling 2.6 capabilities, including motion control and style transfer. This means users can create videos where characters not only speak naturally but also move through scenes with cinematic camera work and consistent visual styling.

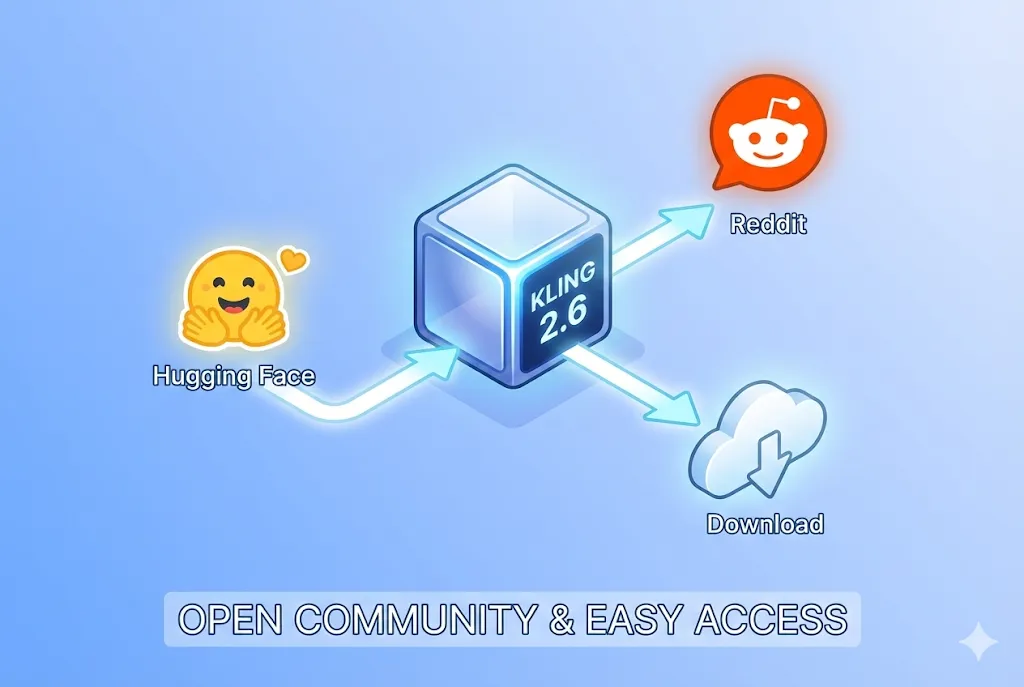

Kling 2.6 Model Download and Online Access

Accessing Kling 2.6 can be done through multiple channels, depending on your needs and technical expertise. The platform offers both online access through web interfaces and local deployment options for users who prefer to run the model on their own infrastructure. Understanding these options helps you choose the approach that best fits your workflow and requirements.

For quick access and experimentation, Kling 2.6 online provides a user-friendly web interface where you can generate videos without any technical setup. This option is ideal for users who want to explore the platform's capabilities or create occasional videos without investing in local hardware or software infrastructure. The online version includes all core features and receives regular updates with new capabilities.

For users requiring more control, privacy, or integration with existing workflows, Kling 2.6 model download options are available. The model can be downloaded and run locally, providing several advantages including offline operation, data privacy, and the ability to customize the implementation for specific use cases. This approach is particularly valuable for enterprises with strict data governance requirements or developers building applications on top of Kling 2.6.

Running Locally: Kling 2.6 on Hugging Face

For developers and technical users, deploying Kling 2.6 locally through Hugging Face provides maximum flexibility and control. The Hugging Face integration allows you to download the model weights and run inference using familiar Hugging Face tools and libraries. This approach is ideal for integrating Kling 2.6 into existing ML pipelines or building custom applications.

Step-by-Step Local Deployment Guide

- Install Dependencies

  ```bash
  pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
  pip install transformers diffusers accelerate safetensors
  ```

  Ensure you have Python 3.8+ installed and CUDA-compatible GPU drivers.

- Clone Repository

  ```bash
  git clone https://huggingface.co/kling-ai/kling-2.6
  cd kling-2.6
  ```

  This downloads the model configuration and necessary files.

- Download Model Weights

  ```bash
  huggingface-cli download kling-ai/kling-2.6 --local-dir ./models
  ```

  The model weights are approximately 8GB in size. Ensure you have sufficient disk space and a stable internet connection.

- Run Inference

  ```python
  import torch
  # Class name as given in this guide. It is not one of the standard
  # transformers auto classes, so confirm the correct loader against the
  # repository's model card before relying on it.
  from transformers import AutoModelForVideoGeneration, AutoProcessor

  # Load the model in half precision and let accelerate place it on the GPU
  model = AutoModelForVideoGeneration.from_pretrained(
      "./models",
      torch_dtype=torch.float16,
      device_map="auto",
  )
  processor = AutoProcessor.from_pretrained("./models")

  # Generate video; inputs must live on the same device as the model
  prompt = "A cinematic drone shot of a futuristic city at sunset"
  inputs = processor(prompt, return_tensors="pt").to(model.device)
  outputs = model.generate(**inputs, num_frames=60)
  ```

The local deployment requires substantial computational resources, including a powerful GPU with at least 16GB of VRAM for optimal performance. System requirements also include sufficient RAM (32GB recommended) and storage space for the model weights and temporary files during generation.
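Before starting an 8GB download, a quick pre-flight check can catch the most common problems early. The sketch below uses only the standard library and checks the Python version and free disk space. The `preflight` name, the default directory, and the 2x headroom factor for temporary files are assumptions. A VRAM check would need PyTorch (for example via `torch.cuda.get_device_properties(0).total_memory`) and is left out to keep the example dependency-free.

```python
import shutil
import sys


def preflight(model_dir: str = ".", weights_gb: float = 8.0, min_python=(3, 8)) -> list:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info < min_python:
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ is required")
    free_gb = shutil.disk_usage(model_dir).free / 1024**3
    # Leave headroom beyond the weights for temporary files (the 2x factor is a guess).
    if free_gb < 2 * weights_gb:
        problems.append(f"only {free_gb:.1f} GB free; want about {2 * weights_gb:.0f} GB")
    return problems


for problem in preflight():
    print("WARNING:", problem)
```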

Running locally offers several advantages, including the ability to batch process multiple videos, integrate with custom preprocessing pipelines, and modify the model architecture for research purposes. However, it also requires technical expertise in machine learning and software development.

Troubleshooting Common Installation Issues

CUDA Out of Memory Errors

If you encounter CUDA out of memory errors during inference, try these solutions:

- Reduce Frame Count: Lower the `num_frames` parameter in your generation script

  ```python
  outputs = model.generate(**inputs, num_frames=30)  # Reduced from 60
  ```

- Enable Gradient Checkpointing: This trades computation time for memory savings. Note that the savings apply when gradients are computed (for example, during fine-tuning); it has little effect on pure inference

  ```python
  model.gradient_checkpointing_enable()
  ```

- Use Mixed Precision: Ensure you're loading the model with FP16

  ```python
  model = AutoModelForVideoGeneration.from_pretrained(
      "./models",
      torch_dtype=torch.float16,
      device_map="auto",
  )
  ```

- Clear GPU Cache: Explicitly clear the cache between generations

  ```python
  torch.cuda.empty_cache()
  ```
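These fixes can be combined into an automatic fallback that retries with fewer frames whenever generation runs out of memory. The wrapper below is a generic sketch, not part of any Kling or Hugging Face API: `generate_with_fallback` and its parameters are hypothetical. It defaults to catching Python's built-in `MemoryError` so that it runs without a GPU; with PyTorch you would pass `oom_exceptions=(torch.cuda.OutOfMemoryError,)` and `on_retry=torch.cuda.empty_cache`.

```python
def generate_with_fallback(generate, num_frames=60, min_frames=8,
                           oom_exceptions=(MemoryError,), on_retry=None):
    """Call generate(num_frames), halving num_frames after each out-of-memory error.

    Returns a (result, frames_used) tuple.
    """
    frames = num_frames
    while True:
        try:
            return generate(frames), frames
        except oom_exceptions:
            if frames // 2 < min_frames:
                raise  # nothing smaller left to try
            if on_retry is not None:
                on_retry()  # e.g. release cached GPU memory
            frames //= 2


def fake_generate(n):
    """Stand-in for model.generate that 'runs out of memory' above 20 frames."""
    if n > 20:
        raise MemoryError(f"cannot allocate {n} frames")
    return f"video with {n} frames"


print(generate_with_fallback(fake_generate, num_frames=60))
```

With the stand-in generator, the call fails at 60 and 30 frames and succeeds at 15.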

Python Dependency Conflicts

Dependency conflicts are common when working with ML libraries. To resolve them:

- Use Virtual Environments: Always work in a clean virtual environment

  ```bash
  python -m venv kling-env
  source kling-env/bin/activate  # On Windows: kling-env\Scripts\activate
  ```

- Pin Specific Versions: Use exact version numbers for critical dependencies

  ```bash
  pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu118
  pip install transformers==4.36.0 diffusers==0.25.0 accelerate==0.25.0
  ```

- Check CUDA Compatibility: Ensure your PyTorch version matches your CUDA version

  ```bash
  python -c "import torch; print(torch.version.cuda)"
  nvidia-smi  # Check CUDA version
  ```

Model Download Interruptions

Large model downloads (8GB+) can fail due to network issues:

- Use Resume Capability: Hugging Face CLI supports resuming interrupted downloads (recent versions resume by default, so the flag may be unnecessary)

  ```bash
  huggingface-cli download kling-ai/kling-2.6 --local-dir ./models --resume-download
  ```

- Download in Parts: If resume doesn't work, download individual model components

  ```bash
  huggingface-cli download kling-ai/kling-2.6 config.json --local-dir ./models
  huggingface-cli download kling-ai/kling-2.6 model.safetensors --local-dir ./models
  ```

- Use Mirror Sites: If the primary Hugging Face server is slow, try regional mirrors

  ```bash
  export HF_ENDPOINT=https://hf-mirror.com
  huggingface-cli download kling-ai/kling-2.6 --local-dir ./models
  ```
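On a flaky connection, wrapping the download command in a retry loop with exponential backoff saves re-running it by hand. The helper below is a generic sketch using only the standard library; `retry_with_backoff` and `download_weights` are hypothetical names, and the repository ID is the one used in this guide.

```python
import subprocess
import time


def retry_with_backoff(task, attempts=5, base_delay=2.0, sleep=time.sleep,
                       retry_on=(OSError,)):
    """Run task(); on failure wait base_delay * 2**n seconds and try again."""
    for attempt in range(attempts):
        try:
            return task()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts; surface the last error
            sleep(base_delay * 2 ** attempt)


def download_weights():
    """Example use: re-run the CLI download until it exits successfully."""
    cmd = ["huggingface-cli", "download", "kling-ai/kling-2.6", "--local-dir", "./models"]
    return retry_with_backoff(
        lambda: subprocess.run(cmd, check=True),
        retry_on=(subprocess.CalledProcessError,),
    )
```

Calling `download_weights()` waits 2, 4, 8, and 16 seconds between the five attempts. Because the CLI picks up partially downloaded files, each retry continues rather than starting over.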

Performance Optimization Tips

To improve generation speed and quality:

- Use TensorRT: Convert the model to TensorRT for faster inference (NVIDIA GPUs only)

- Batch Processing: Generate multiple videos in parallel if GPU memory allows

- Preload Model: Keep the model in memory between generations to avoid reload overhead

- Monitor GPU Usage: Use `nvidia-smi -l 1` to monitor GPU utilization during generation
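The "preload model" tip can be implemented with a small cache so that repeated generations in one process reuse the same loaded object. This is a minimal sketch: `load_model` is a placeholder standing in for the real (slow) loader, and both function names are hypothetical.

```python
from functools import lru_cache


def load_model(path: str):
    """Placeholder for the slow model load; replace with the real loader."""
    print(f"loading weights from {path} ...")
    return {"path": path}  # stand-in object


@lru_cache(maxsize=1)
def get_model(path: str = "./models"):
    """Load the model on first use and reuse the same object afterwards."""
    return load_model(path)


first = get_model()
second = get_model()  # served from the cache; no second load message
print(first is second)  # True
```

The cache only helps within a single long-running process, such as a worker that handles a queue of prompts; a script that exits after each video still pays the load cost every time.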

Pricing and Community Insights

Understanding the cost structure and community reception of Kling 2.6 is essential for making informed decisions about adoption and usage. The platform offers various pricing tiers designed to accommodate different user needs, from individual creators to enterprise customers.

Understanding the Pricing Structure

A common question among potential users is "Is Kling 2.6 free?" The answer depends on your usage level and requirements. Kling 2.6 offers a free tier that provides limited access to the platform's capabilities, allowing users to experiment with basic features and generate a small number of videos each month. This tier is ideal for exploring the platform and determining if it meets your needs.

For more extensive usage, Kling 2.6 offers several paid subscription tiers with increasing limits on video generation, resolution, and access to premium features like advanced motion control and lip sync. Enterprise customers can negotiate custom pricing based on their specific requirements, including dedicated support, SLA guarantees, and integration assistance.

Credit System

Kling 2.6 operates on a flexible credit system. High-performance features like Professional Mode (1080p) and extended duration generation will consume more credits compared to Standard Mode. For the most accurate and up-to-date credit consumption rates, please refer to the real-time display on the generation dashboard.

Community discussions on platforms like Reddit provide valuable insights into real-world usage and cost-effectiveness. Many users report that the platform's pricing is competitive with similar tools, especially considering the advanced features and output quality. The consensus among power users is that Kling 2.6 offers good value for money, particularly for professional applications where output quality and control are critical.

Frequently Asked Questions

Q: Is Kling 2.6 free?

A: Kling 2.6 offers a free tier that allows users to generate up to 10 videos per month with basic features. However, according to community discussions on Reddit, the free tier limits video resolution to 720p and doesn't include advanced features like lip sync or motion control. For serious content creation, most users recommend upgrading to the Pro tier ($29/month), which includes 1080p resolution, unlimited generations, and access to all premium features.

Q: Can I run Kling 2.6 on a 12GB VRAM GPU?

A: While the official recommendation is 16GB VRAM, it is possible to run Kling 2.6 on a 12GB GPU with some optimizations. You'll need to:

- Use mixed precision (FP16) inference

- Reduce the number of generated frames

- Lower the video resolution to 720p

- Enable gradient checkpointing

- Close other GPU-intensive applications

Performance will be slower, and you may experience longer generation times. For optimal results, consider upgrading to a 16GB+ GPU or using the cloud version.

Q: How to fix audio sync issues in Kling 2.6 lip sync?

A: Audio sync issues are usually caused by:

- Poor audio quality - Use clean, noise-free audio recordings

- Incorrect sample rate - Ensure audio is 16kHz or 44.1kHz

- Language mismatch - The lip sync model is optimized for English; other languages may have slight delays

- Complex speech patterns - Fast speech or multiple speakers can cause sync issues

To fix sync issues:

- Pre-process audio with noise reduction

- Use a consistent speaking pace

- Break long monologues into shorter segments

- Adjust the sync offset parameter in the Kling 2.6 interface (typically ranges from -5 to +5 frames)
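Two of the checks above are easy to script: confirming the audio sample rate and translating a frame offset into time. The sketch below uses Python's standard `wave` module, so it only handles uncompressed WAV files; the function names are hypothetical, and the 24 fps figure in the usage example is an assumption, since the frame rate depends on your generation settings.

```python
import wave

SUPPORTED_RATES = (16_000, 44_100)  # from the checklist above


def check_wav(path: str) -> dict:
    """Report sample rate, channel count, and duration of a WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "duration_s": wav.getnframes() / rate,
            "rate_ok": rate in SUPPORTED_RATES,
        }


def offset_frames_to_ms(offset_frames: int, fps: float) -> float:
    """Convert a sync offset in video frames to milliseconds."""
    return offset_frames * 1000.0 / fps


# A +3 frame offset at an assumed 24 fps shifts the audio by 125 ms.
print(offset_frames_to_ms(3, 24))  # 125.0
```

Knowing the offset in milliseconds makes it easier to judge whether a visible lag is within the range the sync offset parameter can correct or whether the audio itself needs re-editing.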

Q: What's the difference between Kling 2.6 online and local deployment?

A: Kling 2.6 online offers convenience with no setup required, automatic updates, and cloud-based processing. Local deployment provides more control, privacy, and the ability to customize the model. Choose online for quick projects and local deployment for enterprise applications or research purposes.

Conclusion: Final Thoughts on Kling 2.6

Kling 2.6 represents a significant advancement in AI video generation technology, offering powerful features like motion control and lip sync that set new standards in the industry. The platform's combination of user-friendly interfaces and advanced capabilities makes it accessible to both casual users and professional content creators.

Whether you choose to use Kling 2.6 online for quick video generation or deploy it locally through Hugging Face integration for maximum control, the platform provides the tools needed to create high-quality AI-generated videos. The comprehensive Kling 2.6 documentation and active community support make it easier to get started and master the platform's features.

As AI video generation continues to evolve, Kling 2.6 stands out as a versatile and powerful solution that balances accessibility with professional-grade capabilities. Whether you're creating marketing content, educational materials, or entertainment videos, Kling 2.6 provides the features and flexibility needed to bring your vision to life.

Ready to experience the future of AI video generation? Visit our homepage to get started with Kling 2.6 today and discover how it can transform your video production workflow.
